CutMix as a Strong Regularizer

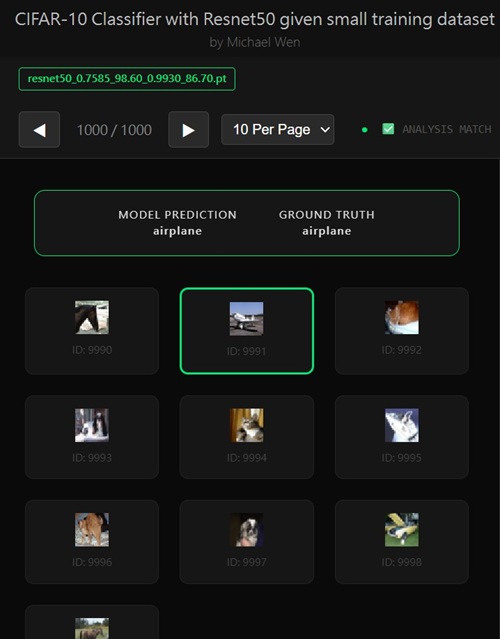

I wrote an app that classifies objects using transfer learning, and I trained the model on the CIFAR-10 dataset myself.


CutMix is often described casually as “cut a patch from one image and paste it into another.” That description is technically correct, but it wildly undersells what CutMix does to the training objective. CutMix is not just data augmentation: it regularizes the label space and the input space at the same time.

What CutMix Does at a High Level

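Given two training samples (x₁, y₁) and (x₂, y₂), CutMix constructs a new sample:

$$\tilde{x} = M \odot x_1 + (1 - M) \odot x_2$$

$$\tilde{y} = \lambda\, y_1 + (1 - \lambda)\, y_2$$

Where:

- M is a binary spatial mask that is 0 inside a rectangle (the patch pasted from x₂) and 1 elsewhere, and ⊙ is element-wise multiplication

- λ is the fraction of pixels where M = 1, i.e. one minus the rectangle's area ratio

- y₁, y₂ are one-hot or smoothed labels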
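A minimal NumPy sketch of the construction above, assuming H × W × C float images and one-hot label vectors. As in the original CutMix formulation, λ is drawn from a Beta(α, α) distribution (α = 1 gives a uniform draw) and the box side is scaled by √(1 − λ). The helper names `sample_box` and `cutmix` and the default `alpha=1.0` are illustrative choices, not a library API.

```python
import numpy as np


def sample_box(h, w, lam, rng):
    """Sample a box covering roughly a (1 - lam) fraction of an h x w image."""
    cut_ratio = np.sqrt(1.0 - lam)               # side length scales with sqrt of area fraction
    cut_h, cut_w = int(h * cut_ratio), int(w * cut_ratio)
    cy, cx = rng.integers(h), rng.integers(w)    # box centre, uniform over the image
    top, bottom = np.clip([cy - cut_h // 2, cy + cut_h // 2], 0, h)
    left, right = np.clip([cx - cut_w // 2, cx + cut_w // 2], 0, w)
    return top, bottom, left, right


def cutmix(x1, y1, x2, y2, alpha=1.0, rng=None):
    """Mix two H x W x C images and their one-hot labels with a rectangular mask."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = x1.shape[:2]
    lam = rng.beta(alpha, alpha)                 # initial mixing ratio
    top, bottom, left, right = sample_box(h, w, lam, rng)

    mask = np.ones((h, w, 1), dtype=np.float32)  # M = 1: keep pixels of x1
    mask[top:bottom, left:right] = 0.0           # M = 0: take pixels from x2
    x_mix = mask * x1 + (1.0 - mask) * x2

    # Clipping at the image border can shrink the box, so recompute lambda
    # as the fraction of pixels that really come from x1.
    lam = float(mask.mean())
    y_mix = lam * y1 + (1.0 - lam) * y2
    return x_mix, y_mix, lam
```

Recomputing λ after clipping keeps the label weights equal to the pixel proportions actually pasted, which is what the ỹ formula assumes.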

Why CutMix Is Stronger Than Random Erasing

Random Erasing overwrites a patch with random values, which removes information. CutMix fills that patch with structured, meaningful content from another image. This forces the model to:

- Recognize multiple objects in one image

- Associate spatial regions with different labels

- Learn part-to-label consistency

How CutMix Changes the Loss Function

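Standard cross-entropy, where pᵢ is the predicted probability of class i:

$$\mathcal{L} = -\sum_i y_i \log p_i$$

With CutMix, the target is no longer discrete. Writing p(y₁ | x̃) for the predicted probability of x₁'s class (and likewise for y₂):

$$\mathcal{L} = -\left[\lambda \log p(y_1 \mid \tilde{x}) + (1 - \lambda) \log p(y_2 \mid \tilde{x})\right]$$

This equals the cross-entropy against the mixed target, −Σ ỹᵢ log pᵢ. It has two important effects: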

- Gradients are shared across classes

- Overconfident predictions are penalized
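The two forms of the loss can be checked numerically. The sketch below (NumPy, a single example, hypothetical helper names) computes the two-term CutMix loss, the equivalent soft-target cross-entropy, and its gradient with respect to the logits.

```python
import numpy as np


def log_softmax(z):
    z = z - z.max()                      # shift logits for numerical stability
    return z - np.log(np.exp(z).sum())


def cutmix_loss(logits, c1, c2, lam):
    """Two-term CutMix loss for source classes c1 and c2 (integer indices)."""
    logp = log_softmax(logits)
    return -(lam * logp[c1] + (1.0 - lam) * logp[c2])


def soft_target_loss(logits, y_soft):
    """Cross-entropy against a soft target vector: -sum_i y_i * log p_i."""
    return -np.sum(y_soft * log_softmax(logits))


def soft_target_grad(logits, y_soft):
    """Gradient of soft_target_loss with respect to the logits: softmax(z) - y_soft."""
    return np.exp(log_softmax(logits)) - y_soft
```

For logits [2.0, 0.5, −1.0] and λ = 0.7 (classes 0 and 1), the two losses agree. The gradient is positive on class 0, whose probability already exceeds λ, so a descent step lowers that logit; it is negative on class 1, which is pulled up toward 1 − λ.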

Effect on Decision Boundaries

Without CutMix, CNNs tend to learn brittle, localized boundaries:

- Strong reliance on single object parts

- High-confidence predictions

- Poor extrapolation

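With CutMix, the target puts probability mass on two classes, so the gradient ∂L/∂z with respect to the logits pulls both source classes upward instead of just one. This means: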

- The model must distribute probability mass

- Decision boundaries become smoother

- Small perturbations no longer flip predictions
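The gradient claim can be checked with a short derivation. Writing z for the logits, p = softmax(z), and using the soft-target form of the CutMix loss:

$$\mathcal{L} = -\sum_i \tilde{y}_i \log p_i, \qquad \log p_i = z_i - \log \sum_j e^{z_j}$$

$$\frac{\partial \mathcal{L}}{\partial z_k} = -\sum_i \tilde{y}_i \left(\delta_{ik} - p_k\right) = p_k - \tilde{y}_k \qquad \left(\text{since } \textstyle\sum_i \tilde{y}_i = 1\right)$$

With a one-hot target, the gradient on the true class, p_y − 1, only vanishes as p_y → 1, so training keeps pushing the logits apart. With ỹ (and y₁ ≠ y₂), the gradient is zero exactly when p = ỹ, i.e. p_{y₁} = λ and p_{y₂} = 1 − λ; a prediction more confident than λ in x₁'s class gets a positive gradient on that logit and is pushed back.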

Why CutMix Often Improves Validation Accuracy

CutMix is particularly effective when:

- The dataset is small

- Classes share visual features

- Overfitting is the main issue

In exchange, CutMix typically lowers:

- Training accuracy (slightly)

- Generalization gap

Why CutMix Can Hurt Transfer Learning

In transfer learning, the backbone is pretrained to recognize whole objects, and CutMix breaks that assumption. Potential failure modes:

- Pretrained filters expect coherent objects

- Mixed spatial semantics confuse mid-level features

- Early unfreezing amplifies gradient noise

The combined result can be higher bias and higher variance (Bias ↑, Variance ↑): if model capacity is not constrained, the network simply memorizes the mixed patterns.

CutMix and Fine-Tuning Stages

CutMix works best when:

- It is applied after the classifier head has converged

- Most backbone layers are frozen

- Learning rates are low

A practical schedule:

- Stage 1: no CutMix

- Stage 2: mild CutMix (small patches)

- Late training: optionally increase the CutMix probability
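One way to encode the staged recipe above, as a plain-Python sketch. The epoch boundaries, probabilities, and the 25% cap on the box area are hypothetical placeholders, not recommended values; "small patches" is modeled here by capping the box's area fraction, 1 − λ.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class CutMixSetting:
    prob: float          # chance of applying CutMix to a given batch
    max_box_frac: float  # largest area fraction (1 - lambda) the pasted box may cover


def cutmix_schedule(epoch, stage1_end=5, stage2_end=20):
    """Hypothetical three-phase schedule; every number here is a placeholder to tune."""
    if epoch < stage1_end:                       # Stage 1: no CutMix while the head converges
        return CutMixSetting(prob=0.0, max_box_frac=0.0)
    if epoch < stage2_end:                       # Stage 2: mild CutMix, small patches only
        return CutMixSetting(prob=0.3, max_box_frac=0.25)
    return CutMixSetting(prob=0.5, max_box_frac=0.25)  # late training: higher probability


def sample_lambda(setting, rng):
    """Draw lambda (fraction of pixels kept from x1); 1.0 means CutMix is skipped."""
    if rng.random() >= setting.prob:
        return 1.0
    return 1.0 - rng.uniform(0.0, setting.max_box_frac)
```

The sampled λ would then size the box (for example with side ratio √(1 − λ)), so capping `max_box_frac` keeps the pasted patches small during the mild stage.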

CutMix vs MixUp

- MixUp blends pixels globally

- CutMix mixes images spatially (each pixel comes from exactly one image)

- CutMix preserves local textures

- MixUp enforces global linearity
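A toy 4 × 4 example makes the contrast concrete. Both functions below are minimal NumPy sketches with hypothetical names, assuming grayscale H × W arrays and the same λ for both methods.

```python
import numpy as np


def mixup(x1, y1, x2, y2, lam):
    """MixUp: every pixel is the same convex blend of both images."""
    return lam * x1 + (1.0 - lam) * x2, lam * y1 + (1.0 - lam) * y2


def cutmix_box(x1, y1, x2, y2, box):
    """CutMix with a fixed box: pixels inside come from x2, the rest from x1."""
    top, bottom, left, right = box
    x = x1.copy()
    x[top:bottom, left:right] = x2[top:bottom, left:right]
    lam = 1.0 - (bottom - top) * (right - left) / (x1.shape[0] * x1.shape[1])
    return x, lam * y1 + (1.0 - lam) * y2


x1, x2 = np.zeros((4, 4)), np.ones((4, 4))
y1, y2 = np.array([1.0, 0.0]), np.array([0.0, 1.0])
xc, yc = cutmix_box(x1, y1, x2, y2, box=(0, 2, 0, 2))  # 2x2 box, so lambda = 0.75
xm, ym = mixup(x1, y1, x2, y2, lam=0.75)
```

Both label vectors come out as [0.75, 0.25], but `xm` is 0.25 everywhere, while `xc` keeps every pixel intact: four pixels of value 1 from x₂ and twelve of value 0 from x₁. That unblended content is what "CutMix preserves local textures" refers to.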

When to Use CutMix

CutMix shines when:

- Validation accuracy has plateaued

- Training accuracy is far above validation accuracy

- Data diversity is limited

Be cautious with CutMix when:

- The backbone is still adapting

- The dataset is extremely small

- Objects occupy most of the image

Key Takeaways

- CutMix is a label-aware strong regularizer

- It reshapes the loss, not just the inputs

- It smooths decision boundaries

- Timing matters more than strength

- In transfer learning, less is often more
